Children under 18 using Facebook and Messenger will automatically be given ‘teen’ accounts, Meta has announced.

The changes follow a similar move for Instagram last year, and are intended to protect young users after high-profile concerns that social media platforms are harming their mental health.

Meta said that the updates have ‘fundamentally changed the experience for teens’ and announced today that restrictions will go further, with users under 16 automatically blocked from going live without parental permission.

Users in the UK, the US, Canada, and Australia will be the first to get the new teen accounts on Facebook and Messenger.

But questions remain about whether the platforms are safe for young users at all, with the Molly Rose Foundation calling for more transparency on what sensitive content is screened out by the teen accounts.

Meta says the rollout has been a success so far, with at least 54 million teens on the new accounts and more to come as the updates become available in more regions.

Of those using them, 97% have kept the default restrictions on.

What is different about teen accounts?

Users under 18 will automatically be placed into accounts with stricter content moderation and more restrictions. These include:

- Sleep mode will be turned on between 10pm and 7am, which will mute notifications overnight and send auto-replies to DMs.

- Suspected nudity will be automatically blurred in DMs.

- Teens under 16 will not be able to go live without parental permission.

- They will have the strictest limits on what content they see (such as content that shows people fighting or promotes cosmetic procedures) in places like Explore and Reels.

- The anti-bullying feature Hidden Words will be turned on, so that offensive words and phrases will be filtered out of teens’ comments and DM requests.

- Accounts will automatically be set to private, meaning teens need to accept new followers, and people who don’t follow them can’t see their content or interact with them.

- Teens can only be messaged and tagged by people they follow or are connected to.

- Teens will get a reminder to leave the app after 60 minutes each day.

- Those aged 13 to 15 are in the strictest settings and cannot change them without parental permission.

- Users aged 16 and 17 are also placed in Teen Accounts automatically, but can change their settings if they want to.

Meta said: ‘We know parents are worried about strangers contacting their teens – or teens receiving unwanted contact.

‘We’ll make these updates available in the next couple of months.’

Should teens under 16 use social media at all?

Andy Burrows, CEO of the Molly Rose Foundation, said: ‘Campaigners and parents will be understandably sceptical whether today’s announcement is just another Meta PR gimmick.’

‘Eight months after Meta rolled out Teen Accounts on Instagram, we’ve had silence from Mark Zuckerberg about whether this has actually been effective and even what sensitive content it actually tackles.’

‘It’s appalling we still don’t know whether Teen Accounts will protect teenage girls from being algorithmically recommended corrosively harmful depression posts, content that describes women as “property” and gay people “mentally ill”.

‘Parents will be understandably alarmed that Meta has chosen to dodge these basic safety questions.’

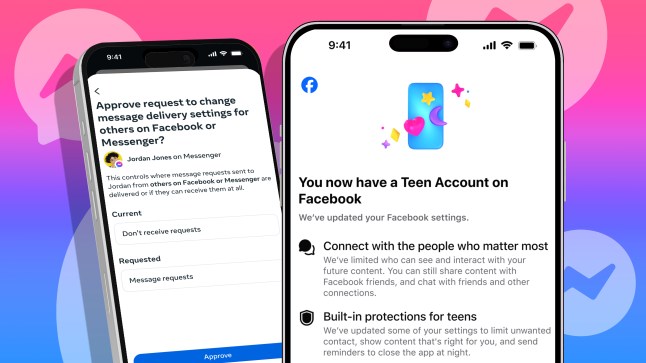

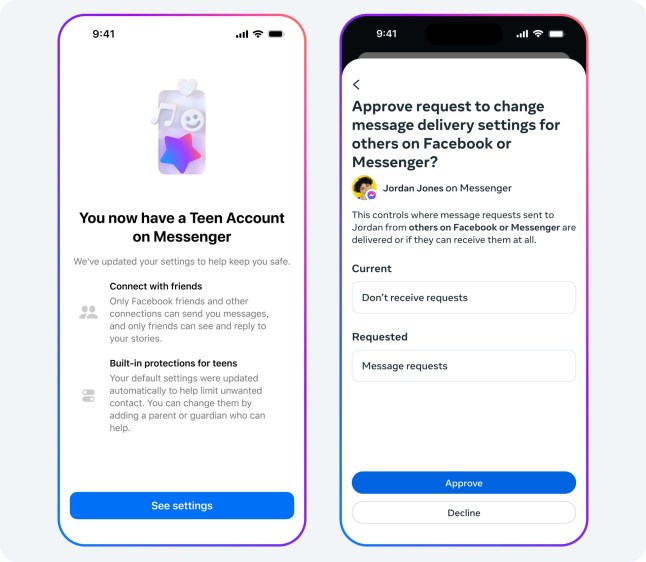

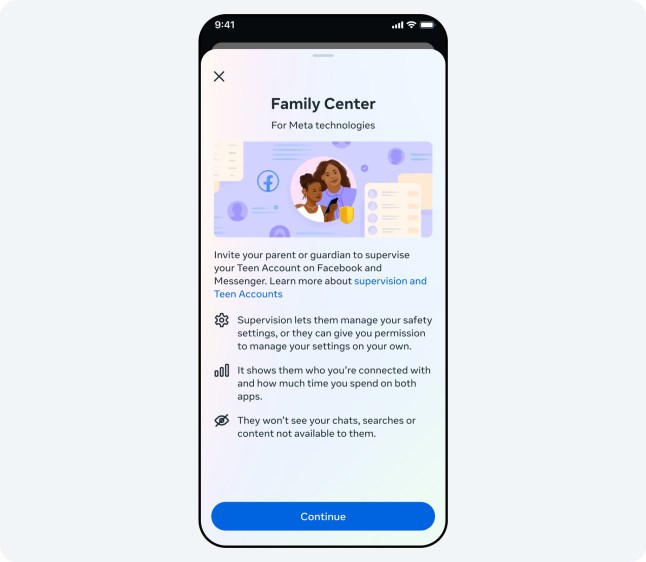

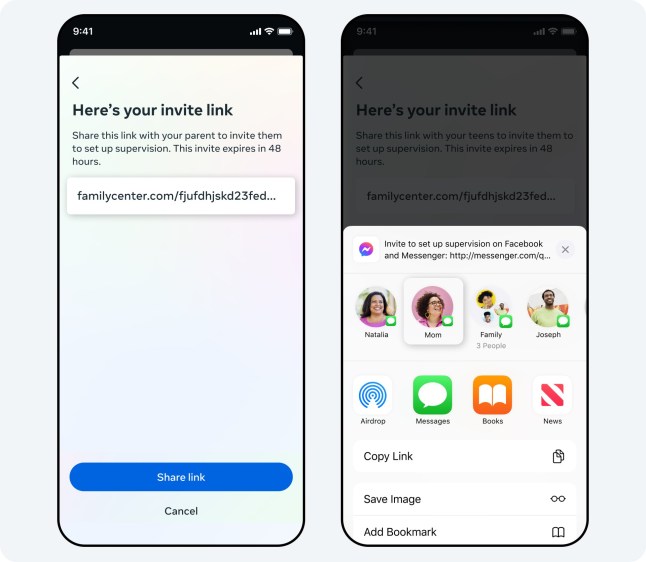

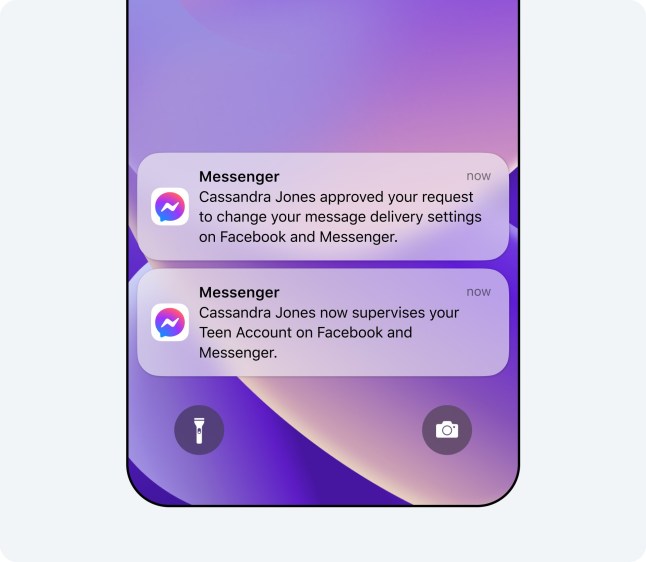

Here’s how the new accounts will look on Facebook and Messenger…

Speaking when the changes were first announced last September, Instagram boss Adam Mosseri said the changes could give parents ‘peace of mind’ about what their children were accessing online.

‘I’m a dad and this is a significant change to Instagram, and one that I’m personally very proud of,’ he said.

Why are teen accounts needed?

There have been several cases of children who died after being exposed to harmful material online, leading to high-profile campaigns for better protections. Among them are:

Molly Rose Russell, aged 14

Molly took her own life aged 14 in November 2017 after viewing suicide and other harmful content on Instagram and Pinterest.

A coroner ruled the schoolgirl, from Harrow in north-west London, died from ‘an act of self-harm while suffering from depression and the negative effects of online content’.

In a hearing that put tech giants in the spotlight, coroner Andrew Walker said material she was consuming in the lead-up to her death ‘shouldn’t have been available for a child to see’.

Tommie-Lee Gracie Billington, aged 11

The youngster lost consciousness after ‘inhaling toxic substances’ during a sleepover at a friend’s house on March 2, and later died in hospital.

His death was believed to have been linked to a social media trend called ‘chroming,’ which involves inhaling toxic chemicals such as paint, solvents, aerosols, cleaning products or petrol.

His grandmother Tina blamed TikTok for his death, saying: ‘We don’t want any other children to follow TikTok or be on social media. In fact, we want to get TikTok taken down and no children to be allowed on any social media under 16 years of age.’

Isaac Kenevan, aged 13

The schoolboy is believed to have died after taking part in a ‘choke challenge’ he had seen on social media.

His mum Lisa has spoken out to say how her son’s inquisitive nature made him vulnerable to harmful content online.

Last week, the BBC reported that Meta and Pinterest are understood to have made ‘secret’ donations to a charity set up in memory of 14-year-old Molly Russell.

The foundation’s latest annual report refers to grants received from donors who wish to remain anonymous. These payments are believed to have been made by Meta and Pinterest since 2024 and are expected to continue over a number of years.

Details of the amounts have not been made public, and it is understood the Russell family have not received any money from the donations.

Leigh Day solicitors issued a statement on behalf of the family: ‘Following the coroner’s inquest into Molly’s death, we have decided that we will pursue the aims we share with Meta and Pinterest through the Molly Rose Foundation to help ensure young people have a positive experience online, instead of pursuing legal action.’

‘We, Molly’s family, have always made clear that we would never accept compensation consequent upon Molly’s death.’

In January, as part of sweeping changes to its policies, Meta boss Mark Zuckerberg said the social media giant would stop using fact checkers to proactively scan for harmful content to boost free speech and reduce ‘censorship’.

Yesterday, this change took effect in the US, with fact-checking moving to a ‘community notes’-style system of self-policing by users.

The Molly Rose Foundation previously warned that Meta’s changes could place young people at greater risk of encountering harmful content online.

Get in touch with our news team by emailing us at webnews@metro.co.uk.
